Introduction

Process: A process is a program in execution. A process is not the same as a program; a program is a passive collection of instructions, while a process is the actual execution of those instructions. A process includes the program code and its current activity.

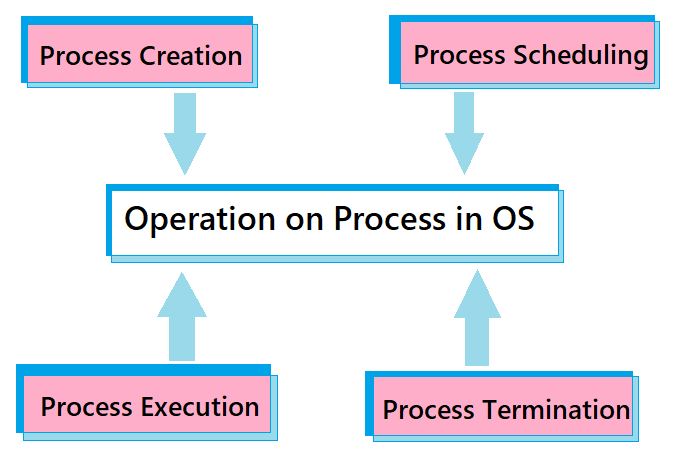

Process Operations: Process operations in an operating system encompass the management and lifecycle of processes, including creation, scheduling, execution, and termination.

- Process Creation: It is the act of generating a new process. This new process is an instance of a program that can execute independently. The creation of a process involves several steps and components, including the allocation of memory, setting up process control blocks (PCBs), and initializing various process attributes.

- Process Scheduling: Scheduling is a crucial function of an operating system that determines the order in which processes access the CPU for execution. Once a process is ready to run, it enters the "ready queue," a data structure that holds all processes waiting to be assigned to the CPU. The scheduler's job is to select a process from this queue based on a specific scheduling algorithm and start its execution. Scheduling algorithms can be classified into different types, such as First-Come, First-Served (FCFS), Shortest Job Next (SJN), Round Robin (RR), and Priority Scheduling.

- Process Execution: Process execution in an operating system involves running a program's instructions on the CPU. When a process is created, it is loaded into memory, and the OS schedules it for execution. The process transitions through various states, such as ready, running, waiting, and terminated, based on its current activity and resource needs. The OS manages these transitions and allocates CPU time to each process according to scheduling policies. During execution, the process's instructions are fetched, decoded, and executed by the CPU. Input/output operations, inter-process communication, and system calls may also occur, requiring OS intervention to manage resources and maintain system stability.

- Process Termination: Process termination is the conclusion of a process's execution in an operating system. This can happen for several reasons, such as the process completing its task, encountering an error, or being explicitly terminated by the user or another process. When a process terminates, the operating system performs several cleanup operations. These include reclaiming any memory and resources allocated to the process, updating process tables, and removing the process's entry from the system's process list. The OS may also notify other processes that were dependent on or communicating with the terminated process. Proper termination is crucial to ensure system stability and to free up resources for other processes.

Need for CPU Scheduling

CPU scheduling is essential in an operating system to ensure efficient and fair use of the CPU among multiple processes. Since the CPU is one of the most critical and limited resources in a computer system, effective scheduling is necessary to optimize its usage. The main reasons for CPU scheduling include:

- Maximizing CPU Utilization: By keeping the CPU busy as much as possible, scheduling helps maximize the overall efficiency and performance of the system.

- Improving Throughput: Scheduling increases the number of processes completed in a given time frame, improving system throughput.

- Reducing Waiting Time: Effective scheduling minimizes the time processes spend waiting in the ready queue, improving system responsiveness and user satisfaction.

- Balancing Load: Scheduling distributes the load evenly across available CPUs in multi-core systems, avoiding bottlenecks and improving overall system performance.

Modes in CPU Scheduling:

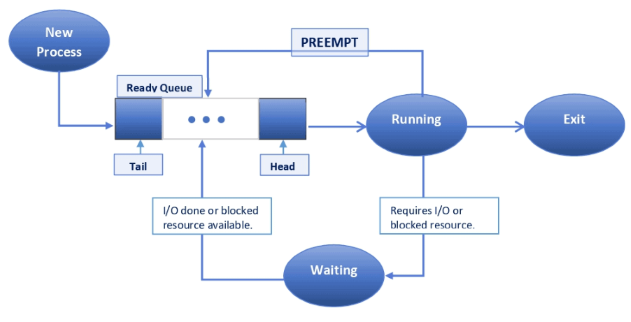

Preemptive Scheduling

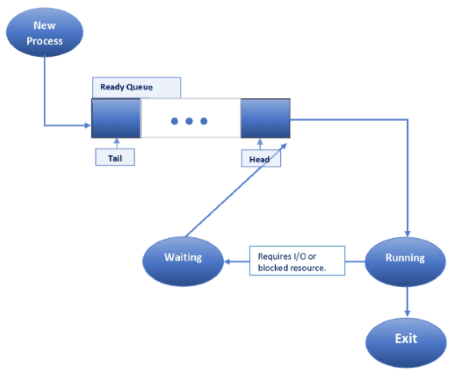

Non-Preemptive Scheduling

Preemptive scheduling allows the operating system to interrupt a currently running process in order to assign the CPU to another process. This is more complex to implement than non-preemptive scheduling but can lead to better system performance and responsiveness.

In non-preemptive scheduling, once a process starts its execution, it runs to completion without being preempted. This approach is simpler and has less overhead but can lead to poor responsiveness for some tasks.

The basic terminologies used in CPU scheduling are as follows:-

- Process ID: The Process ID functions as the name of the process and is typically represented by numbers or by a 'P' followed by numbers, e.g., P0, P1, ..., Pn.

- Arrival Time (AT): The time required for a process to enter the ready queue, or the time when the process is ready to be executed by the CPU, is referred to as Arrival Time. It is always a positive value or zero.

- Burst Time (BT): The time slot required for a process to complete its execution is known as the Burst Time. The Burst Time of a process is always greater than zero.

- Completion Time (CT): The total time required for the CPU to complete a process is known as the Completion Time. This includes the time from when the process arrives in the ready queue until it completes its execution. The Completion Time will always be greater than zero.

- Turnaround Time (TAT): The total time taken from submission of a process to its completion.

Turnaround Time = Completion Time - Arrival Time - Waiting Time (WT): The total time a process spends in the ready queue waiting for CPU time.

Waiting Time = Turnaround Time - Burst Time - Response Time (RT): The time from submission of a request until the first response is produced, not output (for time-sharing environment).

- Ready Queue: The queue where all processes are stored until the CPU is ready to execute them is called the ready queue. This queue is crucial because it helps prevent confusion and conflicts in the CPU when multiple processes are waiting to be executed.

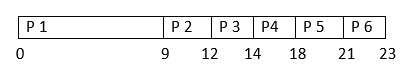

- Gantt Chart: It is the place where all the completed processes are stored. This is useful for calculating metrics such as Waiting Time, Completion Time, and Turnaround Time.

- Throughput: The number of processes that complete their execution per time unit.

Types of CPU Scheduling Algorithms:

First-Come, First-Served (FCFS): Processes are executed in the order they arrive.

- Minimizes average waiting time.

- Efficient for batch processing.

- Difficult to predict the burst time of processes.

- Can lead to starvation of longer processes.

Shortest Job Next (SJN) / Shortest Job First (SJF): Selects the process with the shortest burst time.

- Simple to implement and understand.

- No starvation: every process gets its turn.

- Can lead to the convoy effect, where shorter processes wait for a long process to complete.

- Poor average waiting time for a mix of short and long processes.

Round Robin (RR): Each process is assigned a fixed time slice (quantum) and is executed in a cyclic order.

- Fairness: Each process gets equal CPU time.

- Good for time-sharing systems.

- Performance depends on the length of the time quantum.

- High context switching overhead if the time quantum is too small.

Priority Scheduling: Priority scheduling is a method where processes are assigned priorities, and the process with the highest priority is selected for execution. Priority can be based on various factors such as importance, resource requirements, or user-defined criteria. Priority scheduling can be implemented in two ways: non-preemptive and preemptive

Non-Preemptive Priority Scheduling: In non-preemptive priority scheduling, once a process starts its execution, it runs to completion or until it voluntarily relinquishes the CPU (e.g., waiting for I/O). A new process with a higher priority does not affect the currently running process.

- Simple to implement.

- No additional overhead from context switching during process execution.

- Lower priority processes can experience significant delays if there are many higher priority processes.

- Can lead to starvation of low-priority processes if higher-priority processes keep arriving.

Preemptive Priority Scheduling: In preemptive priority scheduling, a running process can be interrupted if a new process with a higher priority arrives. The higher priority process preempts the current process, which is then placed back into the ready queue.

- High responsiveness: High-priority processes get CPU time as soon as they arrive.

- Better suited for real-time systems where urgent tasks need immediate attention.

- Higher complexity due to the need to handle preemptions and context switches.

- Increased overhead from frequent context switches.

Convoy Effect: FCFS algorithm is non-preemptive in nature, that is, once CPU time has been allocated to a process, other processes can get CPU time only after the current process has finished. This property of FCFS scheduling leads to the situation called Convoy Effect. Suppose there is one CPU-intensive (large burst time) process in the ready queue, and several other processes with relatively fewer burst times but are Input/Output (I/O) bound (Need I/O operations frequently).

Example: Consider the following six processes with the arrival time and CPU burst time given in milliseconds:

| Process ID | Arrival Time (ms) | Burst Time (ms) |

|---|---|---|

| P1 | 0 | 9 |

| P2 | 1 | 3 |

| P3 | 1 | 2 |

| P4 | 1 | 4 |

| P5 | 2 | 3 |

| P6 | 3 | 2 |

Execution Order: P1 -> P2 -> P3 -> P4 -> P5 -> P6

Advantages :

Disadvantages :

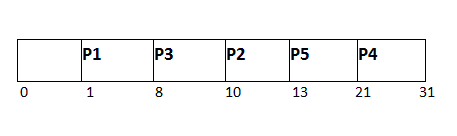

Example: Consider the following five processes with the arrival time and CPU burst time given in milliseconds:

| Process ID | Arrival Time (ms) | Burst Time (ms) |

|---|---|---|

| P1 | 1 | 7 |

| P2 | 3 | 3 |

| P3 | 6 | 2 |

| P4 | 7 | 10 |

| P5 | 9 | 8 |

Execution Order: P1 -> P3 -> P2 -> P5 -> P4

Advantages :

Disadvantages :

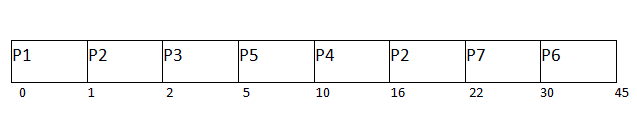

Example: Consider the following six processes with the arrival time and CPU burst time given in milliseconds:

| Process ID | Arrival Time (ms) | Burst Time (ms) |

|---|---|---|

| P1 | 0 | 5 |

| P2 | 1 | 6 |

| P3 | 2 | 3 |

| P4 | 3 | 1 |

| P5 | 4 | 5 |

| P6 | 6 | 4 |

| Time Quantum: 4 ms | ||

Execution Order: P1 -> P2 -> P3 -> P4 -> P5 -> P1 -> P6 -> P2 -> P5

Advantages :

Disadvantages :

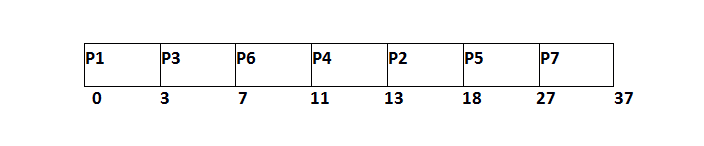

Example: Consider the following seven processes, with their priorities, arrival time and CPU burst time given in milliseconds:

| Process ID | Priority (lower is higher) | Arrival Time (ms) | Burst Time (ms) |

|---|---|---|---|

| P1 | 2 | 0 | 3 |

| P2 | 6 | 2 | 5 |

| P3 | 3 | 1 | 4 |

| P4 | 5 | 4 | 2 |

| P5 | 7 | 6 | 9 |

| P6 | 4 | 5 | 4 |

| P7 | 10 | 7 | 10 |

Execution Order: P1 -> P3 -> P6 -> P4 -> P2 -> P5 -> P7

Advantages :

Disadvantages :

Example: Consider the following seven processes, with their priorities, arrival time and CPU burst time given in milliseconds:

| Process ID | Priority (lower is higher) | Arrival Time (ms) | Burst Time (ms) |

|---|---|---|---|

| P1 | 2 | 0 | 1 |

| P2 | 6 | 1 | 7 |

| P3 | 3 | 2 | 3 |

| P4 | 5 | 3 | 6 |

| P5 | 4 | 4 | 5 |

| P6 | 10 | 5 | 15 |

| P7 | 9 | 15 | 8 |

Execution Order: P1 -> P2 -> P3 -> P5 -> P4 -> P2 -> P7 -> P6

Advantages :

Disadvantages :

Applications

Time-Sharing Systems: Ensures fair CPU allocation among multiple users for improved system responsiveness.

Real-Time Systems: Manages critical tasks to meet strict timing constraints and deadlines.

Web Servers: Efficiently handles multiple incoming requests to minimize response times.

Multi-core Processors:Distributes threads across cores to enhance parallelism and performance.